We’re proud to announce that the 2021 class of OpenAI Scholars has completed our six-month mentorship program and, with stipends and support from OpenAI, produced open-source research projects.

Working alongside leading OpenAI researchers who created GPT-3 and DALL·E, our Scholars explored topics like AI safety, contrastive learning, generative modeling, scaling laws, auto-encoding multi-objective tasks, test time compute, NLP segmentation strategies, and summarization from human feedback.

To wrap up the program, our nine Scholars share their work and how the Scholars Program has impacted their careers. Read more about each of them and their projects below.

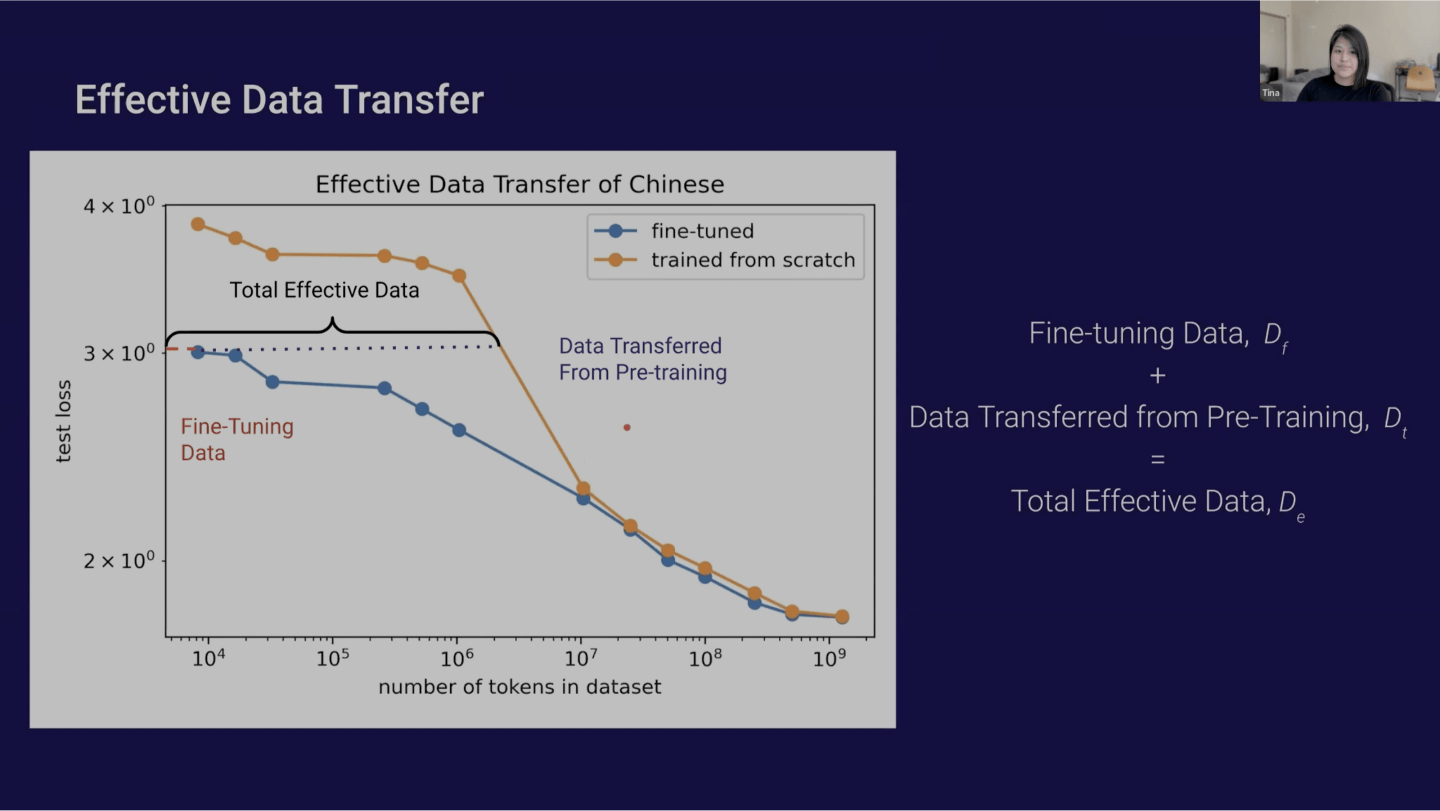

Christina Kim

Mentor: Jerry Tworek

Scaling Laws for Language Transfer Learning

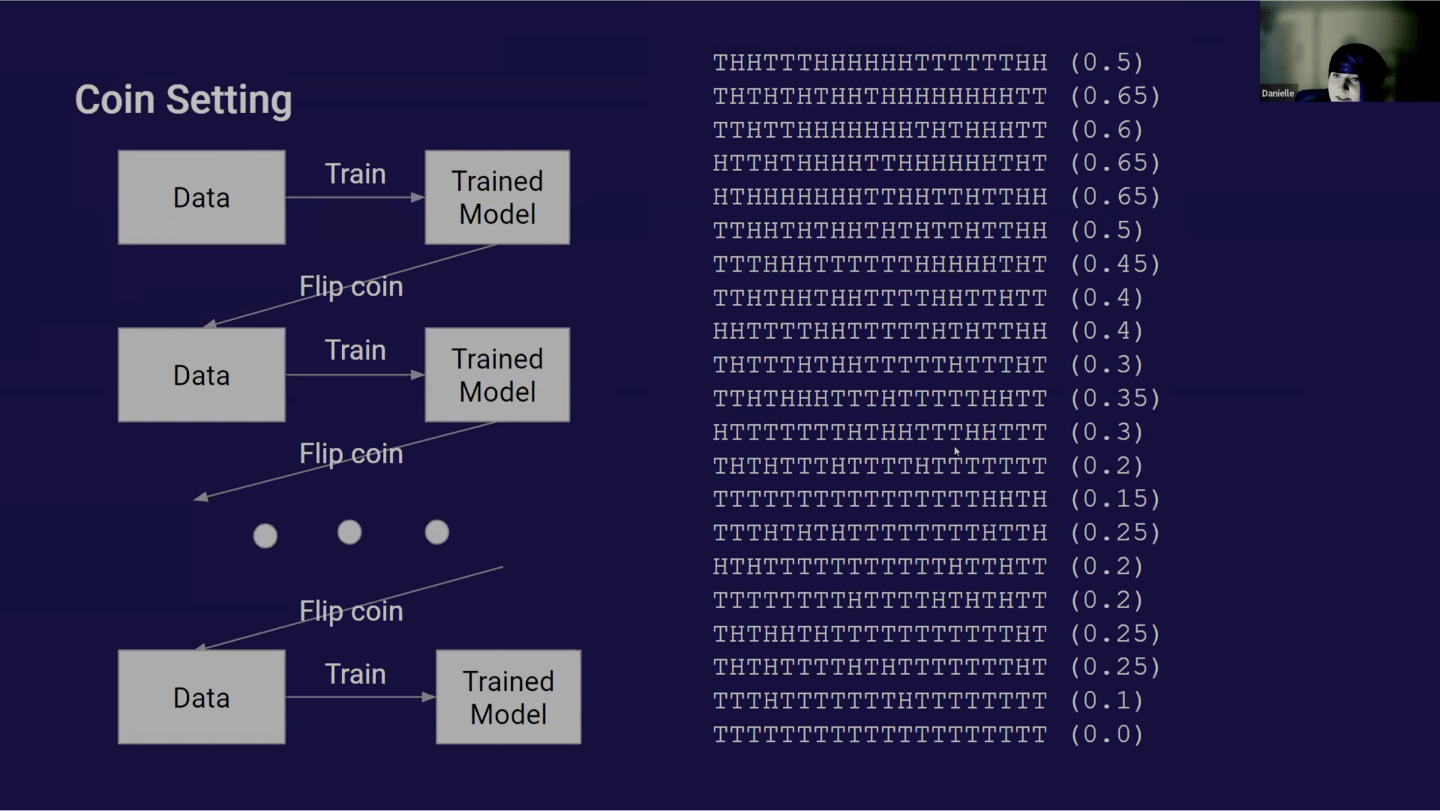

Danielle Ensign

Mentor: Jeff Wu

Feedback Loops in Opinion Modeling

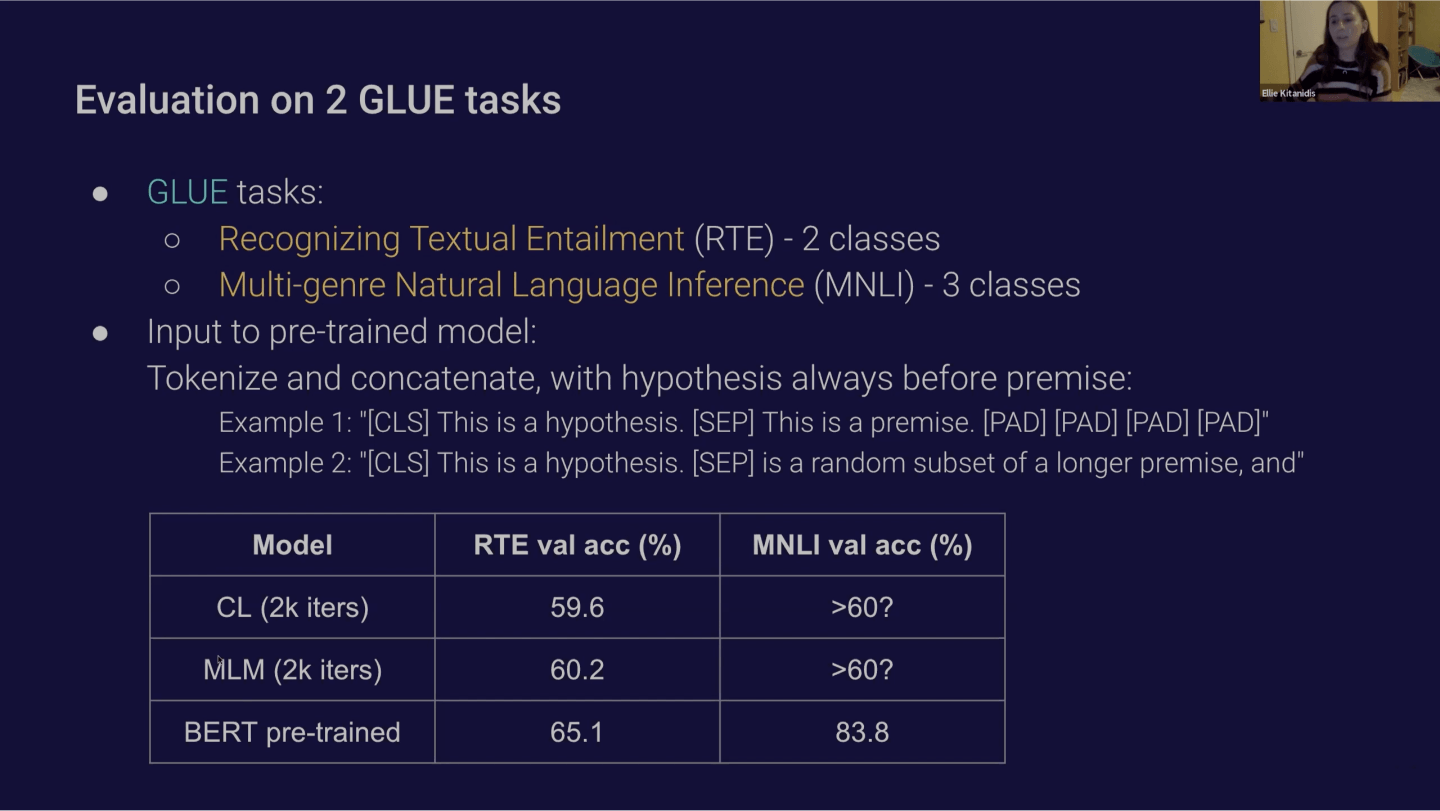

Ellie Kitanidis

Mentor: Pranav Shyam

Contrastive Language Encoding

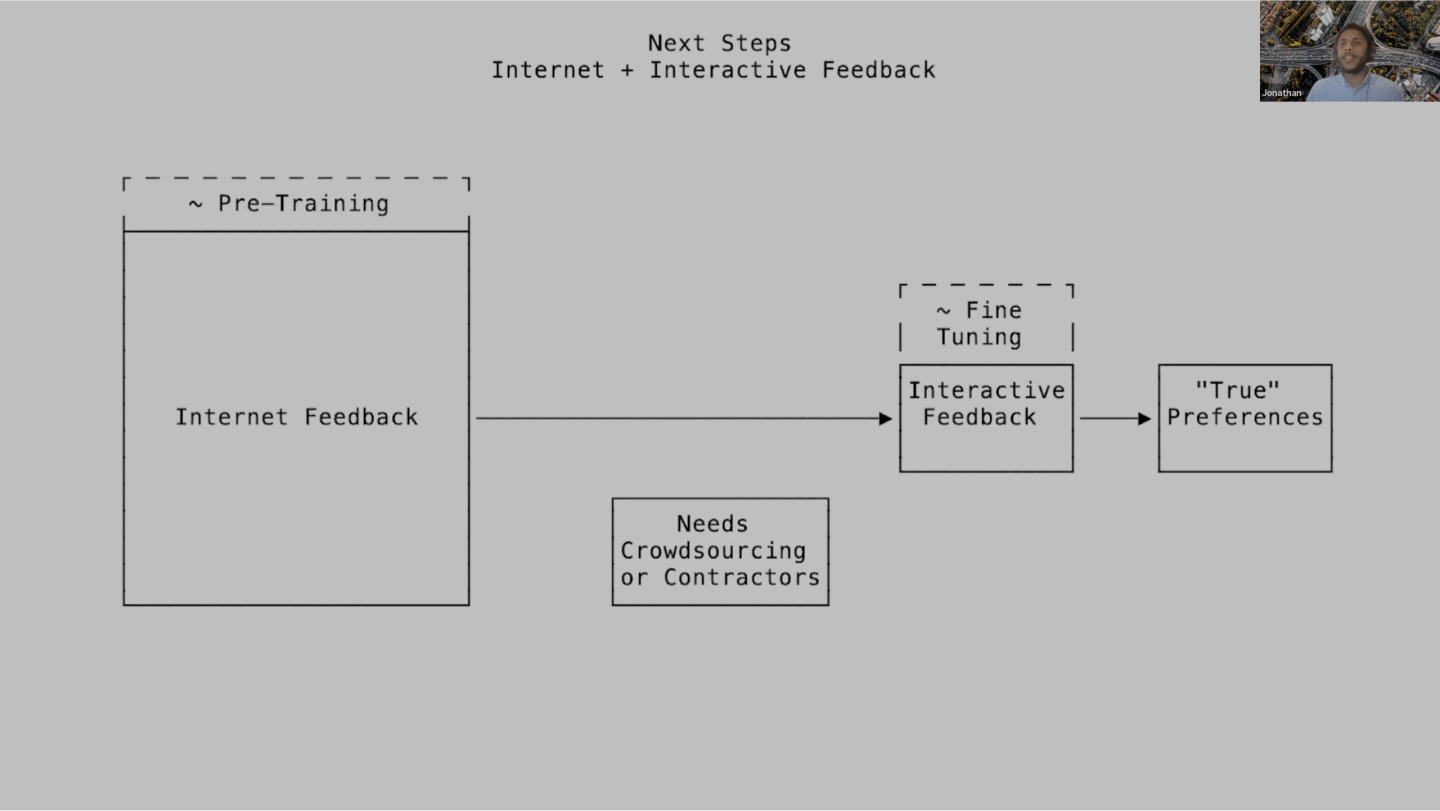

Jonathan Ward

Mentor: John Schulman

Large Scale Reward Modeling

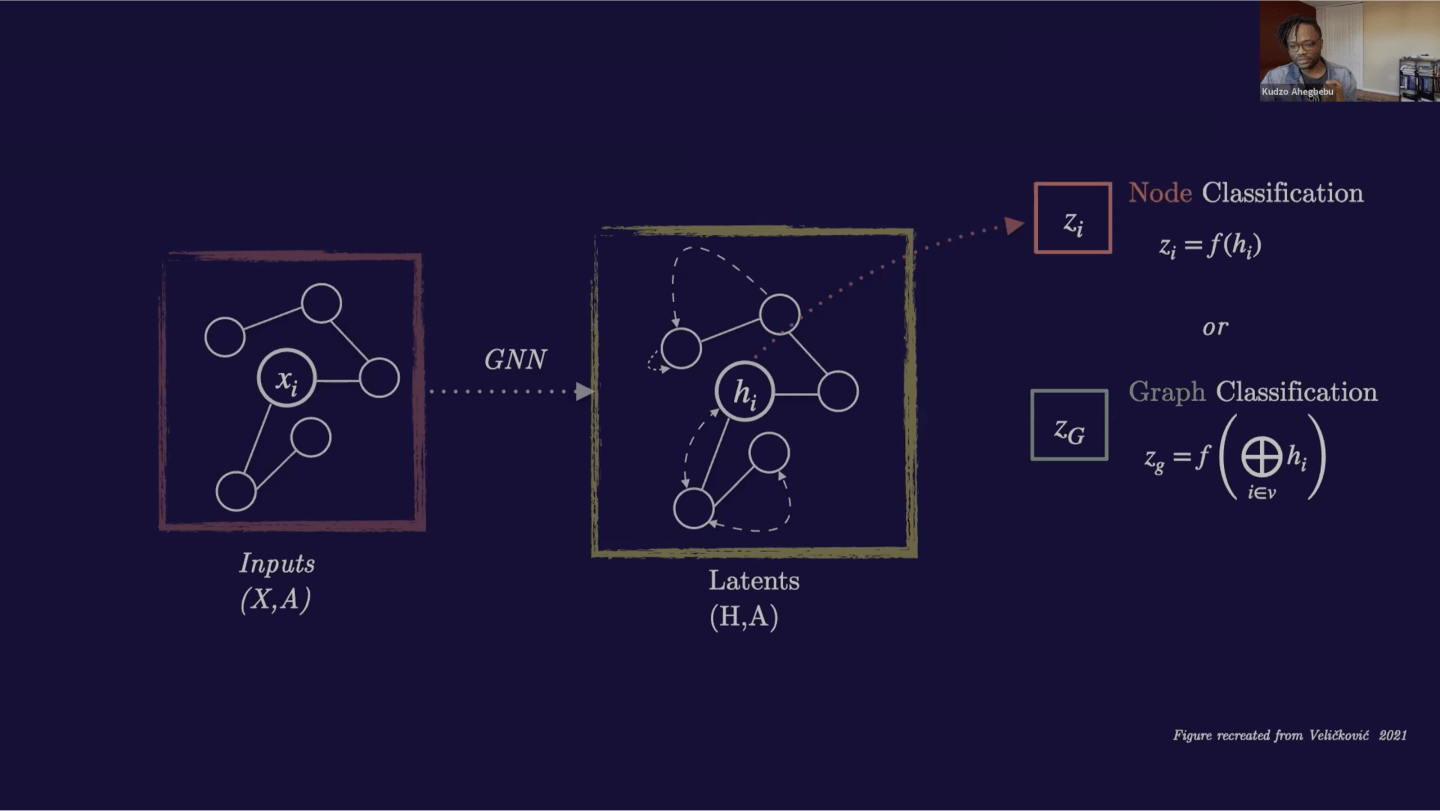

Kudzo Ahegbebu

Mentor: William Guss

Characterizing Test Time Compute on Graph Structured Problems

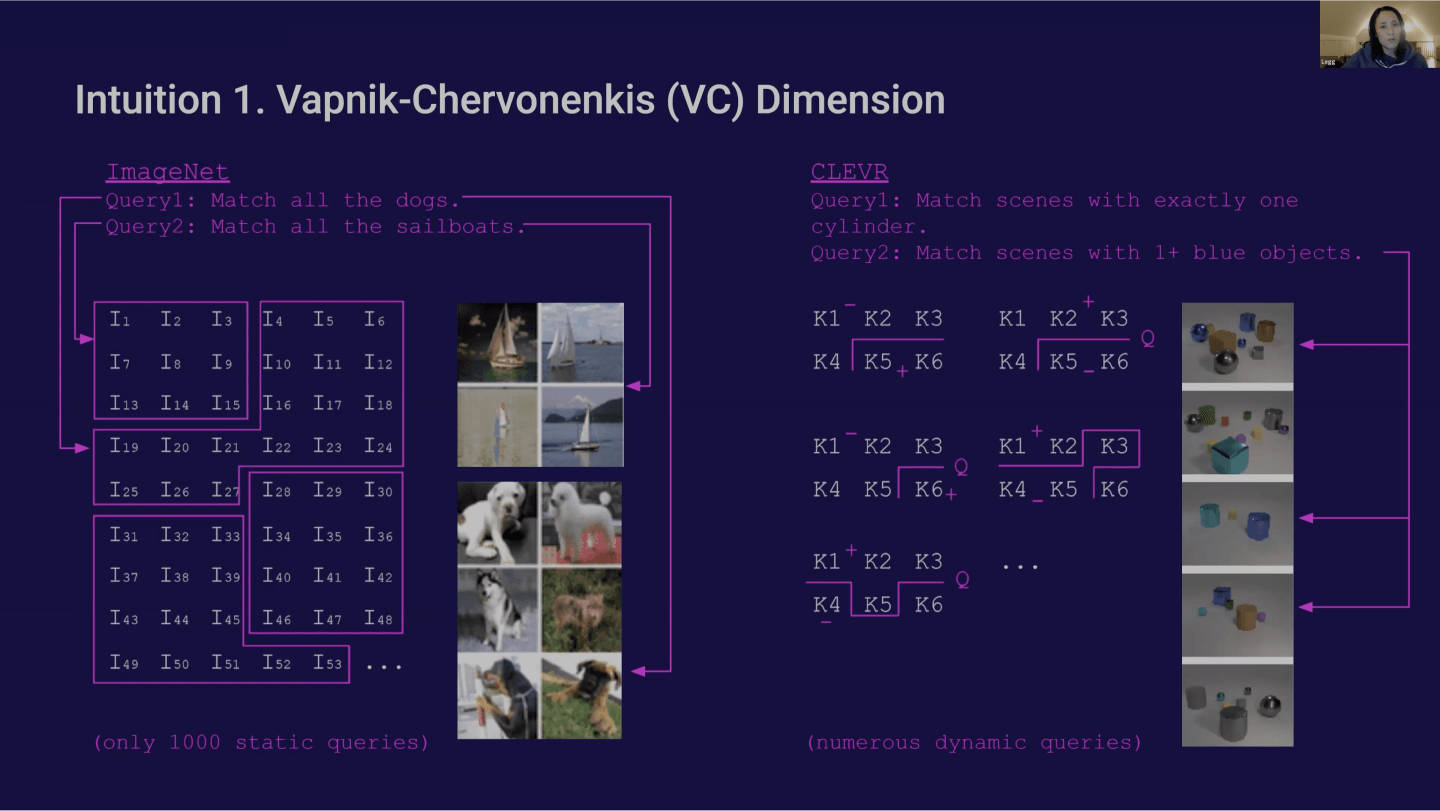

Legg Yeung

Mentor: Gabriel Goh

Breaking Contrastive Models with the SET Card Game

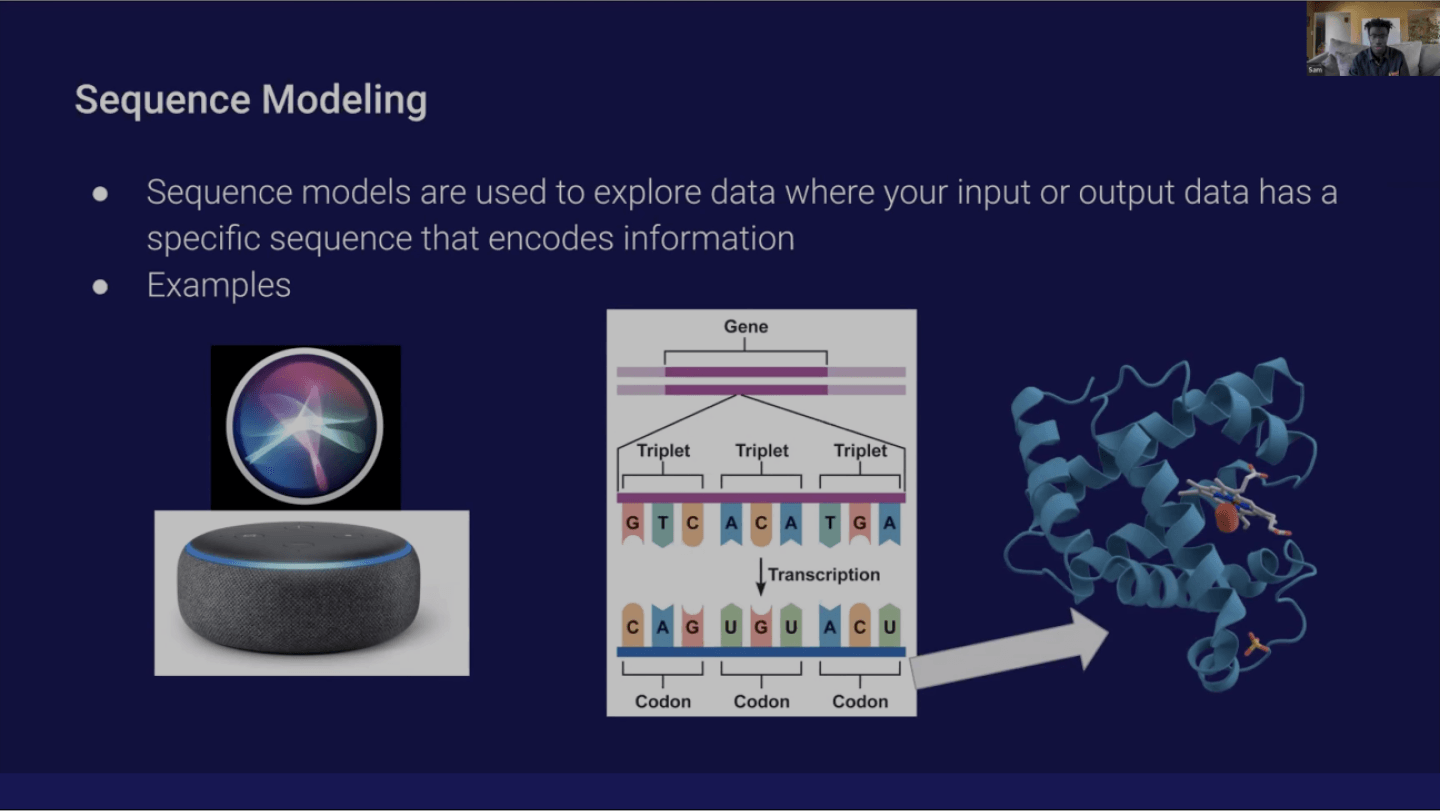

Sam Gbafa

Mentor: Arvind Neelakantan

Words to Bytes: Exploring Language Tokenizations

Shola Oyedele

Mentor: Alex Ray

Social links for Shola Oyedele

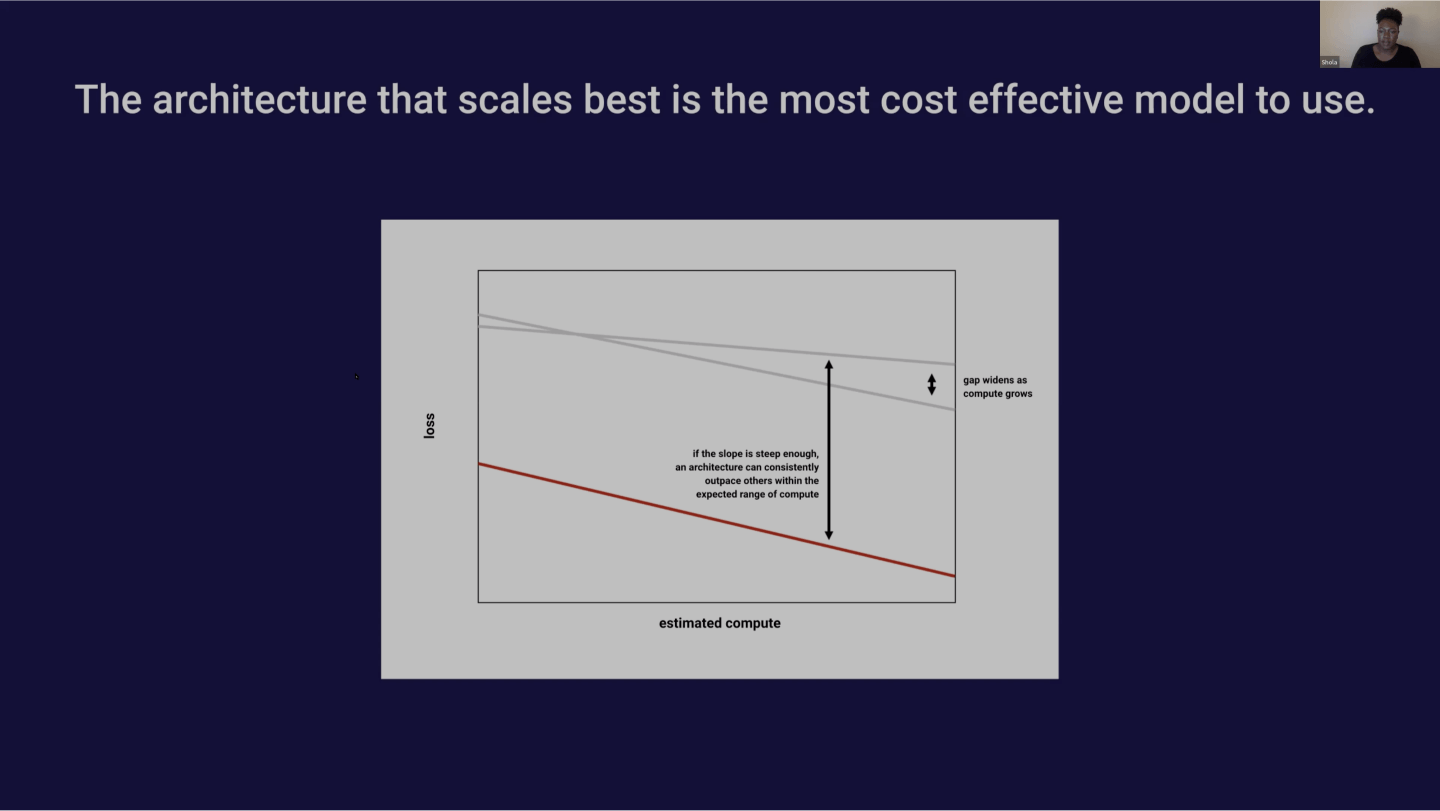

Studying Scaling Laws for Transformer Architecture Variants

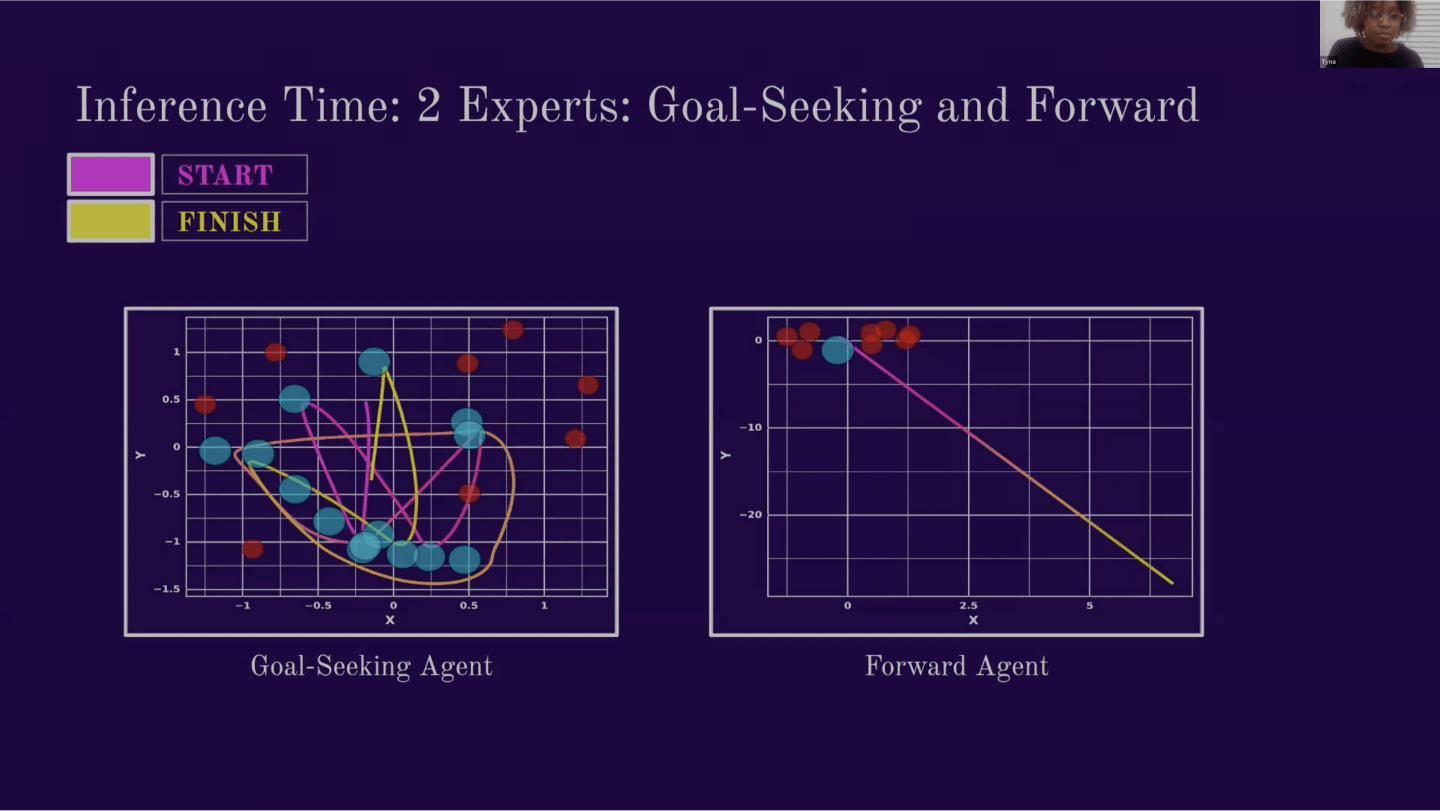

Florentine (Tyna) Eloundou

Mentor: Joshua Achiam

Learning Multiple Modes of Behavior in a Continuous Control Environment